Bottom Up Top Down Detection Transformers for Language Grounding in Images and Point Clouds

Abstract

Most models tasked to ground referential utterances in 2D and 3D scenes learn to select the referred object from a pool of object proposals provided by a pre-trained detector. This is limiting because an utterance may refer to visual entities at various levels of granularity, such as the chair, the leg of the chair, or the tip of the front leg of the chair, which the detector may miss. We propose a language grounding model that attends to the referential utterance and to the object proposal pool computed by a pre-trained detector, and decodes the referenced objects with a detection head instead of selecting them from the pool. In this way, it benefits from powerful pre-trained object detectors without being restricted by their misses. We call our model Bottom Up Top Down DEtection TRansformers (BUTD-DETR) because it uses both language guidance (top-down) and objectness guidance (bottom-up) to ground referential utterances in images and point clouds. Moreover, BUTD-DETR casts object detection as referential grounding and uses object labels as language prompts to be grounded in the visual scene, thereby augmenting supervision for the referential grounding task. The proposed model sets a new state of the art across popular 3D language grounding benchmarks, with significant performance gains over previous 3D approaches (12.6% on SR3D, 11.6% on NR3D and 6.3% on ScanRefer). When applied to 2D images, it performs on par with the previous state of the art. We ablate the design choices of our model and quantify their contribution to performance.
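To make "object labels as language prompts" concrete, here is a minimal Python sketch. It assumes a simple prompt format in which class names are joined with ". "; the separator, the helper names `build_detection_prompt` and `span_targets`, and the example labels are hypothetical and are not taken from the BUTD-DETR code. Each ground-truth box is then supervised to point at the character span of its class name, in the same way a box mentioned in a referential utterance would be.

```python
from typing import Dict, List, Tuple

Span = Tuple[int, int]  # [start, end) character offsets into the prompt


def build_detection_prompt(class_names: List[str], sep: str = ". ") -> Tuple[str, Dict[str, Span]]:
    """Join class names into one 'utterance' and record where each name sits.

    Hypothetical helper: the separator and ordering are illustrative assumptions.
    """
    spans: Dict[str, Span] = {}
    parts: List[str] = []
    offset = 0
    for i, name in enumerate(class_names):
        if i > 0:
            parts.append(sep)
            offset += len(sep)
        spans[name] = (offset, offset + len(name))
        parts.append(name)
        offset += len(name)
    return "".join(parts), spans


def span_targets(box_labels: List[str], spans: Dict[str, Span]) -> List[Span]:
    """Map each ground-truth box's class label to the prompt span it should ground to."""
    return [spans[label] for label in box_labels]


if __name__ == "__main__":
    prompt, spans = build_detection_prompt(["chair", "table", "lamp"])
    targets = span_targets(["table", "chair", "chair"], spans)
    print(prompt)   # chair. table. lamp
    print(targets)  # [(7, 12), (0, 5), (0, 5)]
```

With this framing, a detection dataset becomes extra grounding data: the "utterance" is the list of class names, and every annotated box has a span to ground to.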

Video

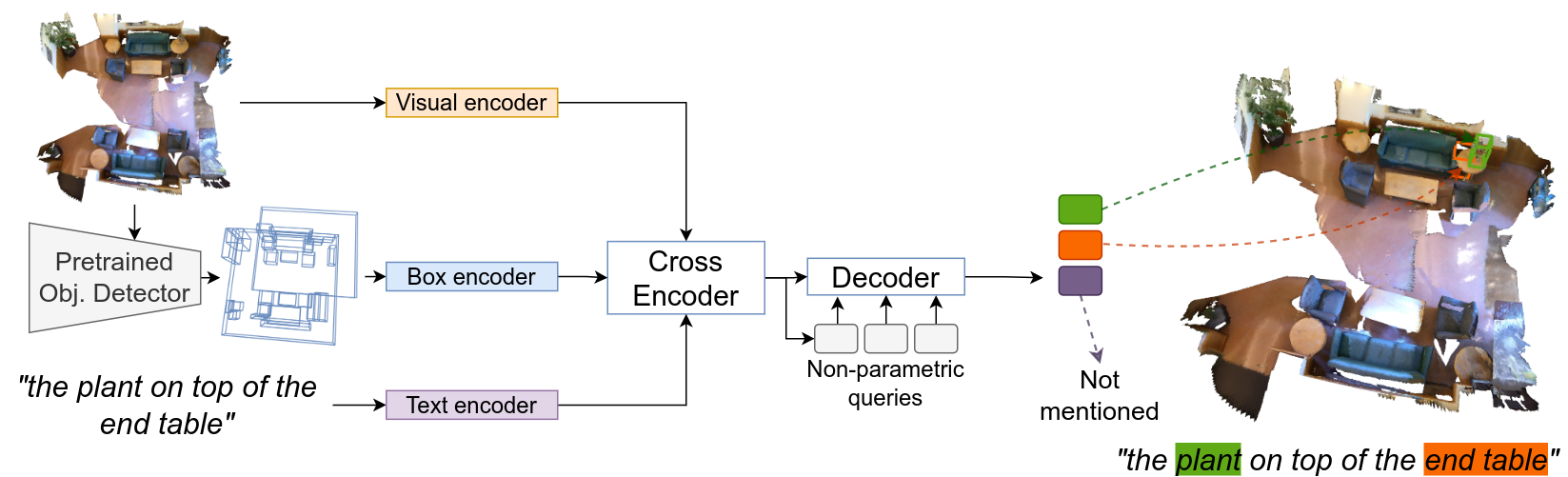

Method: BUTD-DETR (pronounced Beauty-DETR)

Given a 3D scene and a referential utterance, the model localizes all object instances mentioned in the utterance. A pre-trained object detector first extracts object box proposals. Visual, language and box encoders then encode the 3D point cloud, the utterance and the labelled box proposals into sequences of point, word and box tokens, respectively. The three token streams cross-attend to one another, and the model finally decodes boxes together with, for each box, the span of the utterance that it refers to.

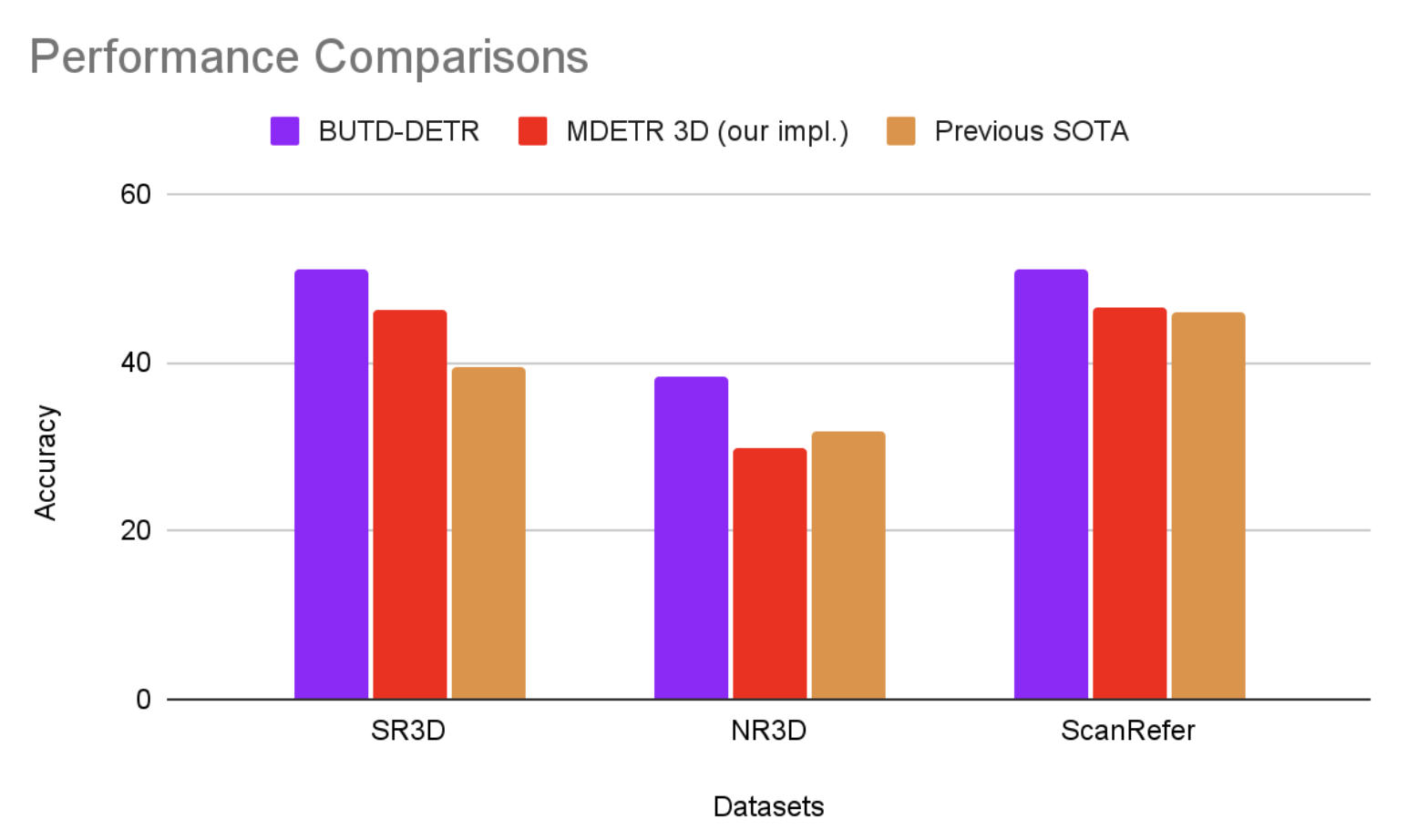
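The three-stream cross-attention described above can be illustrated with a minimal pure-Python sketch. It assumes single-head scaled dot-product attention with identity query/key/value projections and a residual connection, and lets each stream attend to the concatenation of the other two; the function names and this particular wiring are illustrative assumptions, not the BUTD-DETR layer definition, which uses learned projections.

```python
import math
from typing import List, Tuple

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries: List[Vec], context: List[Vec]) -> List[Vec]:
    """Single-head scaled dot-product attention with identity projections (a simplification)."""
    d = len(queries[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in context])
        attended = [sum(w * v[j] for w, v in zip(weights, context)) for j in range(d)]
        out.append([qi + ai for qi, ai in zip(q, attended)])  # residual connection
    return out


def fuse_streams(points: List[Vec], words: List[Vec], boxes: List[Vec]) -> Tuple[List[Vec], List[Vec], List[Vec]]:
    """Each stream attends to the other two; all updates read the pre-layer tokens."""
    new_points = cross_attend(points, words + boxes)
    new_words = cross_attend(words, points + boxes)
    new_boxes = cross_attend(boxes, points + words)
    return new_points, new_words, new_boxes


if __name__ == "__main__":
    points = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 point tokens
    words = [[1.0, 1.0]]                          # 1 word token
    boxes = [[0.0, 2.0], [2.0, 0.0]]              # 2 box tokens
    p, w, b = fuse_streams(points, words, boxes)
    print(len(p), len(w), len(b))  # 3 1 2
```

Each stream keeps its token count, so a decoder can later read from point, word and box tokens alike, for example to score each decoded box against the word tokens when predicting its span.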

Quantitative Results

BUTD-DETR achieves state-of-the-art performance on the SR3D, NR3D and ScanRefer 3D language grounding benchmarks, outperforming all previous models as well as an MDETR-3D equivalent that we implemented.

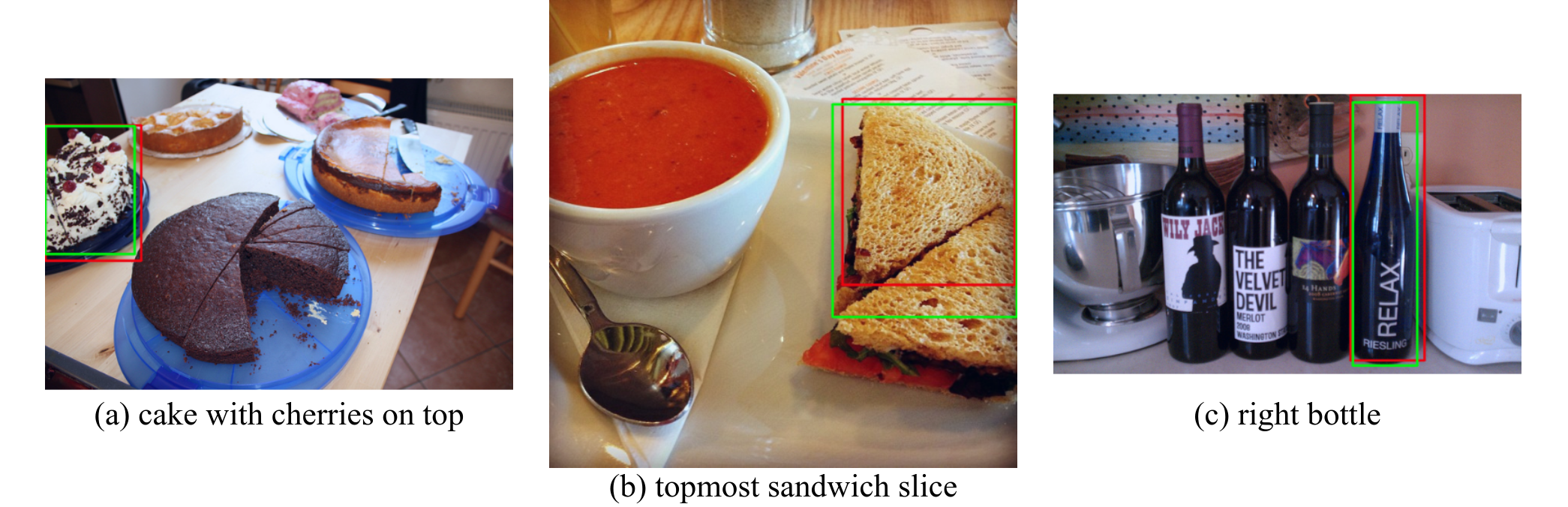

BUTD-DETR also works in the 2D domain, achieving performance comparable to MDETR while converging twice as fast thanks to architectural optimizations such as deformable attention. With minimal changes, our model works on both 3D and 2D inputs, taking a step toward unifying grounding models for 2D and 3D.

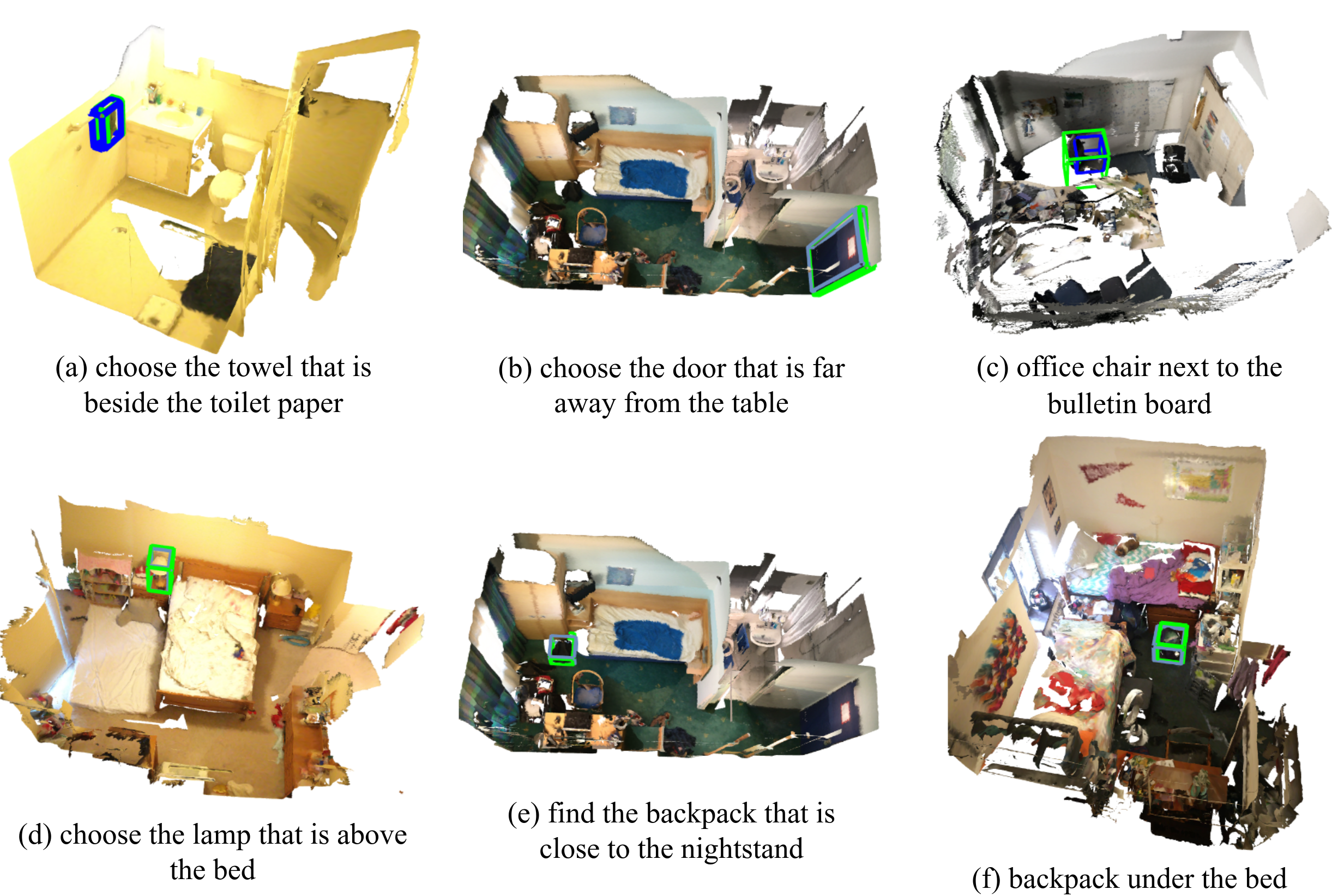
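Deformable attention, introduced with Deformable DETR, lets each query attend to a small set of K sampled locations around a reference point instead of every position in the feature map. The following is a minimal single-head, single-scale Python sketch. In the real operator the sampling offsets and attention logits are predicted from the query by learned linear layers, and there are multiple heads and feature levels; here they are passed in directly. The function names are hypothetical, and this is not BUTD-DETR's implementation.

```python
import math
from typing import List, Sequence, Tuple

FeatureMap = List[List[List[float]]]  # indexed as fmap[y][x][channel]


def bilinear_sample(fmap: FeatureMap, x: float, y: float) -> List[float]:
    """Bilinearly interpolate fmap at continuous (x, y); out-of-bounds neighbours count as zero."""
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    x0, y0 = math.floor(x), math.floor(y)
    out = [0.0] * c
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1.0 - abs(x - xx)) * (1.0 - abs(y - yy))
                for ch in range(c):
                    out[ch] += weight * fmap[yy][xx][ch]
    return out


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def deformable_attention(
    fmap: FeatureMap,
    ref: Tuple[float, float],
    offsets: Sequence[Tuple[float, float]],
    logits: Sequence[float],
) -> List[float]:
    """One query: weighted sum of features sampled at ref + offset_k.

    offsets and logits stand in for the query-predicted values (illustrative assumption).
    """
    weights = softmax(list(logits))
    c = len(fmap[0][0])
    out = [0.0] * c
    for (dx, dy), a in zip(offsets, weights):
        sample = bilinear_sample(fmap, ref[0] + dx, ref[1] + dy)
        for ch in range(c):
            out[ch] += a * sample[ch]
    return out


if __name__ == "__main__":
    fmap = [[[0.0], [1.0]], [[2.0], [3.0]]]  # 2 x 2 map with one channel
    out = deformable_attention(fmap, (0.5, 0.5), [(0.0, 0.0), (0.5, 0.5)], [0.0, 0.0])
    print(out)  # [2.25]
```

The per-query cost grows with the number of sampled points K rather than with the full H x W grid, which is the property usually credited for the faster training convergence of deformable-attention detectors.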

Qualitative Results: 3D

Qualitative Results: 2D

Paper and BibTeX

@misc{https://doi.org/10.48550/arxiv.2112.08879,
  doi = {10.48550/ARXIV.2112.08879},
  url = {https://arxiv.org/abs/2112.08879},
  author = {Jain, Ayush and Gkanatsios, Nikolaos and Mediratta, Ishita and Fragkiadaki, Katerina},
  keywords = {Computer Vision and Pattern Recognition (cs.CV), Computation and Language (cs.CL), FOS: Computer and information sciences},
  title = {Bottom Up Top Down Detection Transformers for Language Grounding in Images and Point Clouds},
  publisher = {arXiv},
  year = {2021},
  copyright = {Creative Commons Attribution 4.0 International}
}